New Challenges around Cooling Efficiency in Data Centers

As cloud computing adoption continues to rise, data centers are hosting an ever-larger share of enterprise workloads. Driven by the surge in internet activity and by computationally intensive workloads from edge computing, the Internet of Things (IoT), and automation, data center suppliers predict that large cloud and internet companies will hit a hyperscale inflection point in the data center value chain. Capital investment and spending on data centers are expected to rise considerably over the next 3 to 5 years, and so is the drive to optimise data center power use and achieve a lower overall PUE (Power Usage Effectiveness, a measure of data center efficiency). In other words, contemporary data center designs with increasing server rack (cabinet) densities also call for energy-efficient cooling. According to Statista, spending on data center systems is expected to amount to 227 billion U.S. dollars in 2022, a rise of 4.7% from the previous year. From a sustainability point of view, the global data center industry is under scrutiny from environmental groups, policy makers and the media, owing to rising demand for custom-designed built-to-suit facilities and for reuse-to-suit conversions of existing data centers with higher capacities. According to the journal Science, data centers consumed 1% of the world’s total energy, and data center workloads increased 6-fold between 2010 and 2020.

When cooling a data center, the ASHRAE-recommended conditions for temperature, humidity, air movement and air cleanliness must be kept consistent and within specific limits to prevent premature equipment failures. At the same time, as noted above, the cooling solution must reduce energy usage. Cooling systems account for around 40% of total data center energy consumption, so they must continue to innovate and adopt alternative design concepts.

Overview of Traditional Cooling Systems

The choice of cooling system for a data center is influenced by the type of IT equipment and its configuration, the data center service level or tier classification, regulatory compliance, and the infrastructure strategy for current and future requirements. The deciding factors are the trade-off between capital expense (CAPEX) and operational expense (OPEX) and the pressing need for a sustainable, green facility.

Designing a new energy-efficient data center or modifying an existing one to maximize cooling efficiency can be challenging. Strategies to reduce data center energy consumption include: common air handlers blowing cold air in the required direction; hot-cold aisle separation with CRAC (computer room air conditioner) units; hot-cold aisle containment with CRAC units; liquid cooling; and green cooling, which uses ventilation fans to draw in cold outside air or relies on renewable energy sources. Different cooling system designs and variations in floor or equipment layout influence the cooling results and, in turn, the efficiency.

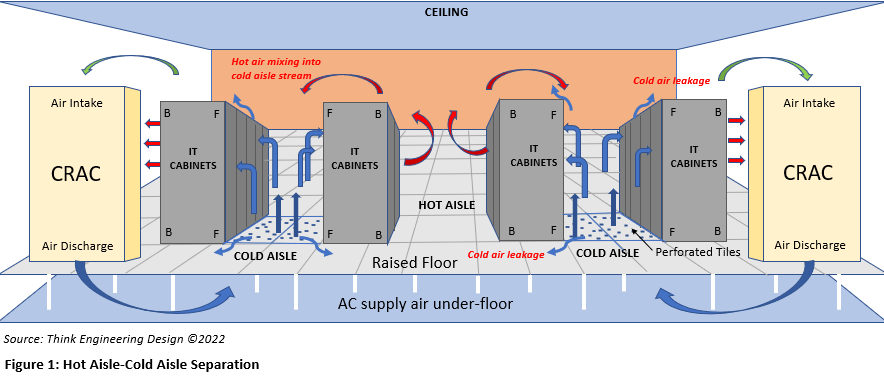

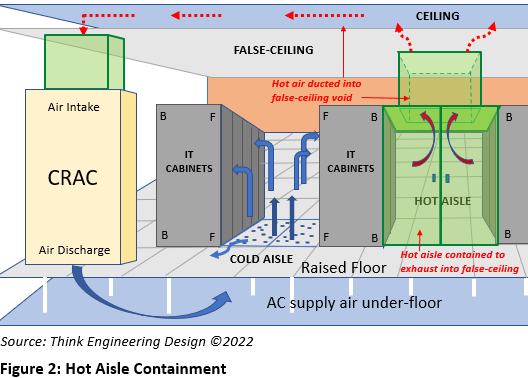

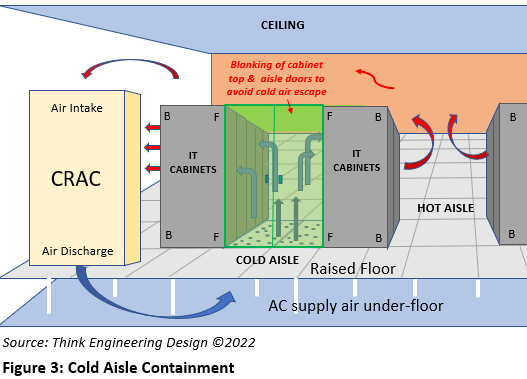

Air cooling has long been the preferred method for cooling data centers, and for many years data centers used raised-floor systems to deliver cold air to the server racks. In line with the ASHRAE 90.4 standard, one approach to energy efficiency is to lay out IT equipment racks according to hot aisle/cold aisle separation (Figure 1): racks are aligned in rows so that the cabinet fronts face the cold aisle and exhaust is directed into the hot aisle. This design is a clear advance over simply blowing cold air in one direction, where mixing of hot and cold air makes the cooling system inefficient. Aisle separation has delivered cooling-efficiency benefits for some time because it maximizes heat dissipation. To build on that advantage and suppress recirculation and mixing of hot and cold air, containment is added. In hot aisle containment (Figure 2), vertical partitions run from the IT cabinets and the hot aisle up to the false ceiling, isolating hot air from cold air and preventing short-circuiting. In cold aisle containment (Figure 3), the cold aisle is blanked off at cabinet level and at the aisle entry. To optimise these strategies, Computational Fluid Dynamics (CFD) is used to model airflow through the IT cabinets and predict hot and cold spots in the data center. The results inform decisions on where new equipment is best installed, whether to change the cooling system design strategy, or whether a complete change in infrastructure design is needed.

Making data centers ‘smart’ by minimizing lighting levels and installing sensors that optimise airflow (through variable fan speeds, intermittent operation, economisers and so on) can further improve effectiveness. But air cooling cannot keep up indefinitely with the increased densities of next-generation server workloads, and at some point the capital outlay for air cooling can no longer be justified. Another measure to counter rising server heat dissipation is in-row cooling, although it brings comparatively more failure points and more difficult service access. With high-performance computing and data volumes exploding, a breakthrough in cooling energy efficiency is the need of the hour. Nevertheless, traditional air cooling remains a viable option and will continue to have its place in the data center.

The Evolution of Liquid Cooling

Because of their higher densities, specific heat capacities, and thermal conductivities, liquids are generally far more efficient than air at transferring heat. Water, for instance, has roughly 3,300 times the volumetric heat capacity of air and conducts heat approximately 25 times better. Liquid cooling offers benefits over air cooling such as higher energy efficiency, a smaller footprint, improved server reliability, lower total cost of ownership (TCO) and reduced equipment noise. According to the latest Uptime Institute global data center survey, average PUE (Power Usage Effectiveness) has fallen from 2.5 in 2007 to around 1.6 today. Using liquid cooling and other best practices, most built-to-suit facilities fall between 1.2 and 1.4 and could even reach 1.06 or better. PUE has been a critical benchmark for scoring data center efficiency, and as data center managers strive to reduce resource waste and OPEX, cooling equipment suppliers have focused on improving electrical efficiency. This makes a strong case for liquid cooling, which profoundly changes the profile of data center energy consumption.
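The water-versus-air comparison can be sanity-checked against approximate textbook property values. The sketch below assumes round figures for water and dry air near 20 °C at atmospheric pressure. These inputs are assumptions for illustration, not values taken from the sources cited here, and the exact ratios shift with temperature and pressure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fluid:
    name: str
    density_kg_m3: float          # mass per unit volume
    specific_heat_j_kg_k: float   # heat capacity per unit mass
    conductivity_w_m_k: float     # thermal conductivity

    @property
    def volumetric_heat_capacity_j_m3_k(self) -> float:
        """Heat stored per cubic metre per kelvin (density x specific heat)."""
        return self.density_kg_m3 * self.specific_heat_j_kg_k


# Assumed approximate properties near 20 degC and 1 atm.
WATER = Fluid("water", 998.0, 4182.0, 0.60)
AIR = Fluid("air", 1.2, 1005.0, 0.026)

heat_capacity_ratio = (
    WATER.volumetric_heat_capacity_j_m3_k / AIR.volumetric_heat_capacity_j_m3_k
)
conductivity_ratio = WATER.conductivity_w_m_k / AIR.conductivity_w_m_k

if __name__ == "__main__":
    print(f"Volumetric heat capacity, water/air: {heat_capacity_ratio:,.0f}x")
    print(f"Thermal conductivity, water/air: {conductivity_ratio:.0f}x")
```

With these inputs the ratios land in the same range as the figures quoted above: a few thousand times for volumetric heat capacity and a couple of dozen times for thermal conductivity.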

The average density per rack was around 8.4 kW in 2020 and is rising steadily. When rack densities exceed 20-25 kW, direct liquid cooling and precision air cooling become more relevant, being both more efficient and more economical. Current methods of liquid cooling are rear-door heat exchangers (RDHX), direct-to-chip cooling and immersion cooling. As the name suggests, an RDHX replaces the rear door of a data center rack with a liquid heat exchanger. RDHX can be used alongside air-cooling systems to cool environments with mixed rack densities, but it has drawbacks relating to data center colocation and the pipe connections needed at the cabinets.
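To see why air cooling becomes strained at these densities, the sensible-heat relation P = ρ · c_p · V · ΔT can be rearranged for the volumetric airflow V that a rack needs. The sketch below is illustrative only: the air properties and the 12 K temperature rise across the rack are assumed values, not figures from the sources cited here, and the function name is hypothetical.

```python
AIR_DENSITY_KG_M3 = 1.2            # assumed, air near 20 degC
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0  # assumed, dry air


def required_airflow_m3_s(rack_power_kw: float, delta_t_k: float = 12.0) -> float:
    """Airflow needed to carry away rack_power_kw at a given air temperature rise.

    Rearranged from P = rho * c_p * V * delta_T.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    power_w = rack_power_kw * 1000.0
    return power_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


if __name__ == "__main__":
    # Rack densities taken from the paragraph above.
    for density_kw in (8.4, 20.0, 25.0):
        flow = required_airflow_m3_s(density_kw)
        print(f"{density_kw:5.1f} kW rack -> {flow:.2f} m^3/s of air")
```

At a fixed temperature rise the airflow scales linearly with rack power, so a 25 kW rack needs roughly three times the airflow of an 8.4 kW rack.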

Direct-to-chip cold plates sit atop the heat-generating components in the cabinet and draw off heat through single-phase cold plates or two-phase evaporation units. The cold plates can remove approximately 70% of the heat generated by the equipment in the rack, leaving 30% that must be removed by air-cooling systems. Single-phase and two-phase immersion cooling systems submerge servers and other rack components in a thermally conductive dielectric liquid, eliminating the need for air cooling. Immersion cooling thus makes the fullest use of the thermal transfer properties of liquid and is the most energy-efficient form of liquid cooling.
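The 70/30 split described above translates directly into the residual load left for the room's air-cooling system. This is a minimal sketch: the function name and the 25 kW example rack are hypothetical, and the 70% capture fraction is the approximate figure quoted above rather than a property of any specific product.

```python
def split_rack_heat(rack_power_kw: float, liquid_capture_fraction: float = 0.70):
    """Return (heat removed by cold plates, heat left for air cooling) in kW."""
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("capture fraction must be between 0 and 1")
    liquid_kw = rack_power_kw * liquid_capture_fraction
    air_kw = rack_power_kw - liquid_kw
    return liquid_kw, air_kw


if __name__ == "__main__":
    liquid_kw, air_kw = split_rack_heat(25.0)
    print(f"Cold plates: {liquid_kw:.1f} kW, residual air load: {air_kw:.1f} kW")
```

For a 25 kW rack this leaves about 7.5 kW for air cooling, which is below the 8.4 kW average rack density cited earlier.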

Currently, the biggest obstacle to growth of the liquid cooling market is data center operators’ concern over the risks of bringing liquid to the rack. However, integrating leak detection technology into system components and at key locations across the piping system, together with the use of dielectric fluids, mitigates the risk of equipment damage from leaks.

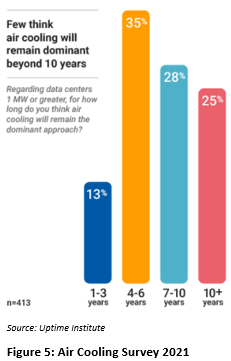

Research and Markets expects the liquid cooling industry to exceed USD 7.2 billion in 2026, growing at a CAGR of 14.64%. Figure 4 shows the current market concentration of liquid cooling, and Figure 5 shows the views of 413 respondents to a recent Uptime survey on whether air cooling will remain dominant in future data centers.

Rather than treating data center energy efficiency in silos (IT equipment, cooling energy, electrical systems and so on), data center design is moving toward a more integrated approach built on purpose-built liquid cooling platforms and components, with automated server and component maintenance. Administrators are adopting plug-and-play liquid cooling architectures that fit into modern data center designs.

A few examples of environmentally conscious efforts: Facebook’s data center in northern Sweden uses relatively simple ventilation fans to draw in the surrounding Arctic air (free cooling) to cool its servers, while Microsoft has been exploring innovative cooling techniques, including the feasibility of locating data center capsules on the ocean floor.

Liquid cooling provides an opportunity to reduce the overall data center space needed for a given IT infrastructure. In a 100% liquid-cooled deployment, hot and cold aisle requirements go away, and racks can be placed almost anywhere. However, liquid cooling also has drawbacks: higher CAPEX for new use cases, the cost of retrofitting or redesigning existing ones, the need for skilled operation and maintenance personnel and, most importantly, vendor lock-in.

But liquid cooling shows promise and is here to stay.

References:
https://datacenterfrontier.com/the-evolution-and-the-adoption-of-liquid-cooling-for-data-centers/
https://www.statista.com/statistics/314596/total-data-center-systems-worldwide-spending-forecast/
https://journal.uptimeinstitute.com/a-look-at-data-center-cooling-technologies/
https://www.vertiv.com/en-asia/solutions/learn-about/liquid-cooling-options-for-data-centers/